Beyond the Hype: AI Can't Solve Software's Essential Complexity

Why we're still wrestling with the same software werewolf

Introduction: The Werewolf of Software Complexity Is Not Impressed by AI

Of all the monsters who fill the nightmares of our folklore, none terrify more than werewolves, because they can transform unexpectedly from the familiar into horrors. For these, we seek bullets of silver that can magically lay them to rest. The familiar software project has something of this character […], usually innocent and straightforward, but capable of becoming a monster of missed schedules, blown budgets, and flawed products. So we hear desperate cries for a silver bullet, something to make software costs drop as rapidly as computer hardware costs do.

— Fred Brooks, No Silver Bullet-Essence and Accidents of Software Engineering

Nearly four decades later, Fred Brooks’s 1986 insight is still spot-on. And remember, this is the mastermind who steered IBM’s legendary OS/360, the 1960s mega-project that produced the operating system for IBM’s System/360 mainframes and one of the largest software efforts of its time.

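Brooks split the difficulties of software into two kinds: essential ones, inherent in the nature of software (its complexity, conformity, changeability, and invisibility), and accidental ones, which attend its production but are not inherent to it. Advances in software engineering have largely targeted the accidental difficulties, solving problems like string manipulation, floating-point math, and memory management with increasingly powerful and abstract languages. Large Language Models (LLMs) are the latest in this line of progress, but they are not a silver bullet.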

The Essential Complexity of Software

Despite the grand visions touted by tech luminaries like Sam Altman, Dario Amodei, and Mark Zuckerberg, who all say that LLMs will eventually write code on their own, the reality of executing major software projects tells a far more sobering story. Professor Bent Flyvbjerg provides a crucial reality check, noting that while 40% of IT projects come in on budget, the other 60% fail spectacularly, with an average cost overrun of 450%.

This isn’t just an abstract statistic. Look no further than Canada’s notorious Phoenix pay system, which is careening towards a 1000% budget overrun: from an estimated CA$300 million to CA$2.6 billion spent, an overrun of roughly 770% so far. And before you blame amateur management, consider this: the system was implemented by an industry titan, IBM.
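The overrun figures above come from a simple formula: (actual - estimate) / estimate × 100. The helper below is a minimal sketch using the article’s rounded Phoenix figures (CA$300 million estimated, CA$2.6 billion spent); the function names are illustrative, not taken from any report.

```python
def cost_overrun_pct(estimate: float, actual: float) -> float:
    """Cost overrun expressed as a percentage of the original estimate."""
    if estimate <= 0:
        raise ValueError("estimate must be positive")
    return (actual - estimate) / estimate * 100


def cost_at_overrun(estimate: float, overrun_pct: float) -> float:
    """Final cost implied by a given overrun percentage."""
    return estimate * (1 + overrun_pct / 100)


estimate = 300e6  # rounded original estimate used in the text
actual = 2.6e9    # rounded amount spent used in the text

print(f"overrun so far: {cost_overrun_pct(estimate, actual):.0f}%")
print(f"cost at a 1000% overrun: {cost_at_overrun(estimate, 1000) / 1e9:.1f} billion")
```

On these numbers the overrun is about 767%, and a full 1000% overrun would mean a final bill 11 times the estimate, or about 3.3 billion.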

Professor Bent Flyvbjerg found that cost overruns in IT projects follow a power law: the distribution has a fat tail, so extreme overruns are far more common than a bell curve would predict. Fred Brooks, for his part, observed that a team of n people has n(n-1)/2 communication channels, a number that grows quadratically with team size. That coordination overhead is what led him to his famous law: adding manpower to a late software project makes it later. Depressing, isn’t it?
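Brooks’s channel count is easy to check, and a toy model shows why it bites. The sketch below assumes, purely for illustration, a task of 100 person-months of work plus a coordination cost of 1 person-month per communication channel, shared evenly by the team; these numbers and the `schedule` model are hypothetical, not Brooks’s own formula.

```python
def channels(n: int) -> int:
    """Pairwise communication channels in a team of n people: n(n-1)/2."""
    return n * (n - 1) // 2


def schedule(n: int, work: float = 100.0, cost_per_channel: float = 1.0) -> float:
    """Toy model (hypothetical): calendar months needed when the total effort,
    useful work plus coordination overhead, is split across n people."""
    return (work + cost_per_channel * channels(n)) / n


for n in (1, 5, 10, 14, 20, 40):
    print(f"{n:>2} people: {channels(n):>3} channels, {schedule(n):6.1f} months")

best = min(range(1, 101), key=schedule)
print("fastest team size in this model:", best)
```

In this model the schedule bottoms out at 14 people; past that point each newcomer adds more coordination than capacity, and a 40-person team ends up exactly as slow as a 5-person one (22 months).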

More isn’t always better. Agentic AI is all the hype, but putting more AI agents to work may not help much if they end up coordinating the way humans do…

It is really hard to make a big software project converge to its final form; divergence is the norm. Many times I have watched projects grow in size while the rate of regression bugs climbed, to the point where new features were dwarfed by the number of issues they introduced.

How We Tackle Complexity Traditionally

Despite all this, we can’t solve large problems without a large team; this is when complexity begins to creep in.

FAANG companies are notoriously selective in their hiring, arguably because they understand that a few individuals can derail a promising project or make it diverge.

Software engineering is about creating abstractions that are as leak-proof as possible, minimizing the effects between unrelated components, and keeping those components as loosely coupled as possible. It is a constant battle to keep complexity down and simplicity up.
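One common way to keep components loosely coupled is to make them depend on a small interface rather than on each other. The Python sketch below uses `typing.Protocol` for that; `Storage`, `InMemoryStorage`, and `ReportService` are hypothetical names chosen for illustration.

```python
from typing import Dict, Optional, Protocol


class Storage(Protocol):
    """The only thing ReportService knows about persistence."""

    def save(self, key: str, value: str) -> None: ...

    def load(self, key: str) -> Optional[str]: ...


class InMemoryStorage:
    """One interchangeable backend; a database-backed class with the same
    two methods could replace it without touching ReportService."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def save(self, key: str, value: str) -> None:
        self._data[key] = value

    def load(self, key: str) -> Optional[str]:
        return self._data.get(key)


class ReportService:
    """Business logic that depends on the Storage abstraction, not on a backend."""

    def __init__(self, storage: Storage) -> None:
        self._storage = storage

    def publish(self, name: str, body: str) -> None:
        self._storage.save(f"report:{name}", body.strip())

    def fetch(self, name: str) -> Optional[str]:
        return self._storage.load(f"report:{name}")


service = ReportService(InMemoryStorage())
service.publish("q3", "  draft  ")
print(service.fetch("q3"))
```

Because `InMemoryStorage` never inherits from `Storage`, the coupling is structural: any object with matching `save` and `load` methods satisfies the protocol for a type checker, which keeps the two sides independent.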

So, Why All the Hype?

What “accidental difficulties” are LLMs solving that have stumped previous technologies? The list is remarkable:

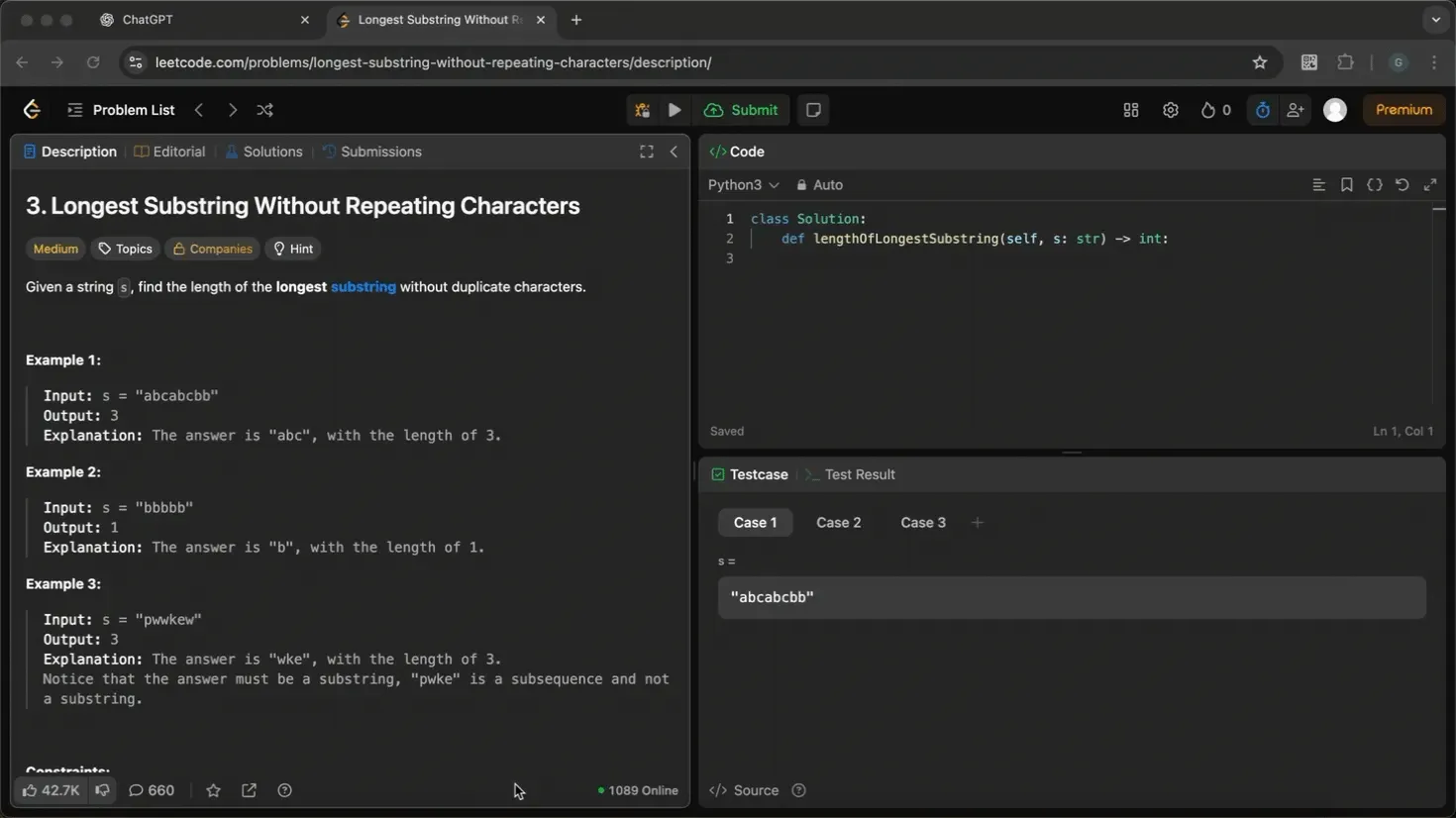

- Solving Algorithmic Challenges: I’ve watched ChatGPT solve medium-difficulty LeetCode problems in a single prompt. More complex “hard” problems, the kind that truly test seasoned engineers, can often be solved with just a few prompt iterations. Historically, proficiency at this level was a ticket to a high-paying job at a top tech company. Don’t believe me? Take this medium-level one about finding the longest substring without repeating characters:

I didn’t even bother to copy the problem text; I just pasted a screenshot of it, and ChatGPT solved it in one shot.

- Designing Data Structures: LLMs have internalized the canon of classical data structures. They demonstrate a remarkable ability to combine these structures creatively, tailoring solutions to fit the specific needs of a problem.

- Performance Optimization: I’ve personally seen ChatGPT transform slow SQL queries into versions that run ten times faster. This power isn’t limited to general coding. A former colleague, Operations Research professor Jean-François Côté, notes that his students use AI to reformulate complex linear programming models into more efficient, well-established variations.

- Intuitive Refactoring: Deterministic refactoring tools need precise instructions. In contrast, you can give an LLM a high-level command like “Convert this conditional logic into inheritance,” and it will carry out the change in textbook Martin Fowler fashion (his “Replace Conditional with Polymorphism” refactoring), with no need to manually define scopes, parameters, or conditions.

And the list goes on. The hype is there because LLMs can solve a vast range of the accidental difficulties in software engineering, and that is a huge development.
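ChatGPT’s answer to the longest-substring problem from the first bullet (LeetCode problem 3) is not reproduced here. For reference, the problem has a well-known linear-time sliding-window solution; the Python sketch below is that textbook approach, not necessarily the code ChatGPT produced.

```python
def length_of_longest_substring(s: str) -> int:
    """Length of the longest substring of s with no repeated characters."""
    last_seen = {}  # character -> index of its most recent occurrence
    start = 0       # left edge of the current repeat-free window
    best = 0
    for i, ch in enumerate(s):
        # If ch already occurs inside the window, slide the left edge past it.
        if ch in last_seen and last_seen[ch] >= start:
            start = last_seen[ch] + 1
        last_seen[ch] = i
        best = max(best, i - start + 1)
    return best


print(length_of_longest_substring("abcabcbb"))
print(length_of_longest_substring("pwwkew"))
```

Each character enters and leaves the window at most once, so the run time is O(n) rather than the O(n²) or worse of checking every substring.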
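To make the data-structure bullet concrete, a least-recently-used (LRU) cache is a classic case of combining two structures: a hash map gives O(1) lookup, and a doubly linked list keeps entries ordered by recency so the stalest one can be evicted in O(1). This is an illustrative choice, not an example from the author; in practice Python already ships `functools.lru_cache` for memoizing functions.

```python
class _Node:
    """Doubly linked list node holding one cache entry."""
    __slots__ = ("key", "value", "prev", "next")

    def __init__(self, key=None, value=None):
        self.key, self.value = key, value
        self.prev = self.next = None


class LRUCache:
    """Hash map for O(1) lookup plus a linked list ordered from most to
    least recently used, so eviction is also O(1)."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.map = {}                           # key -> node
        self.head, self.tail = _Node(), _Node()  # sentinels at both ends
        self.head.next, self.tail.prev = self.tail, self.head

    def _unlink(self, node):
        node.prev.next, node.next.prev = node.next, node.prev

    def _push_front(self, node):
        node.prev, node.next = self.head, self.head.next
        self.head.next.prev = node
        self.head.next = node

    def get(self, key):
        node = self.map.get(key)
        if node is None:
            return None
        self._unlink(node)
        self._push_front(node)  # mark as most recently used
        return node.value

    def put(self, key, value):
        node = self.map.get(key)
        if node is not None:
            node.value = value
            self._unlink(node)
            self._push_front(node)
            return
        if len(self.map) == self.capacity:
            lru = self.tail.prev  # least recently used entry
            self._unlink(lru)
            del self.map[lru.key]
        node = _Node(key, value)
        self.map[key] = node
        self._push_front(node)


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # touching "a" makes "b" the least recently used
cache.put("c", 3)   # evicts "b"
print(cache.get("b"), cache.get("a"), cache.get("c"))
```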
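The SQL behind the ten-fold speedup mentioned in the performance bullet is not given. As a hypothetical illustration of one common kind of rewrite, the sketch below uses Python’s built-in `sqlite3` module: wrapping an indexed column in a function (`date(created_at)`) typically forces a full table scan, while an equivalent half-open range on the raw column lets SQLite use the index. The table, index, and data are invented for the example, and the exact `EXPLAIN QUERY PLAN` wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, total REAL)")
conn.execute("CREATE INDEX idx_orders_created ON orders (created_at)")
# Hypothetical data: one order per hour for 28 days, timestamps as ISO-8601 text.
conn.executemany(
    "INSERT INTO orders (created_at, total) VALUES (?, ?)",
    [(f"2024-01-{d:02d} {h:02d}:00:00", float(d * h)) for d in range(1, 29) for h in range(24)],
)

# Slow form: the function call hides created_at from the index.
slow = "SELECT SUM(total) FROM orders WHERE date(created_at) = '2024-01-15'"
# Rewritten form: the same rows, expressed as a range the index can serve.
fast = ("SELECT SUM(total) FROM orders "
        "WHERE created_at >= '2024-01-15' AND created_at < '2024-01-16'")


def plan(sql: str) -> str:
    """Join the 'detail' column (4th field) of the EXPLAIN QUERY PLAN rows."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


for name, sql in (("slow", slow), ("fast", fast)):
    result = conn.execute(sql).fetchone()[0]
    print(f"{name}: {result} via {plan(sql)}")
```

Both queries return the same total, but the first plan reports a `SCAN` of the table while the second reports a `SEARCH` using `idx_orders_created`; on a large table that difference is where order-of-magnitude speedups usually come from.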
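The refactoring in the last bullet corresponds to Fowler’s “Replace Conditional with Polymorphism.” The before/after sketch below is a hypothetical example (the shipping rules and class names are invented) showing the shape of the change: each branch of the type-code conditional becomes a subclass, and callers stop branching.

```python
from abc import ABC, abstractmethod


# Before: one function switches on a type code.
def shipping_cost(kind: str, weight_kg: float) -> float:
    if kind == "standard":
        return 5.0 + 1.0 * weight_kg
    elif kind == "express":
        return 15.0 + 2.0 * weight_kg
    elif kind == "pickup":
        return 0.0
    raise ValueError(f"unknown shipping kind: {kind}")


# After: each branch becomes a subclass overriding one polymorphic method.
class Shipping(ABC):
    @abstractmethod
    def cost(self, weight_kg: float) -> float:
        """Price for a parcel of the given weight."""


class Standard(Shipping):
    def cost(self, weight_kg: float) -> float:
        return 5.0 + 1.0 * weight_kg


class Express(Shipping):
    def cost(self, weight_kg: float) -> float:
        return 15.0 + 2.0 * weight_kg


class Pickup(Shipping):
    def cost(self, weight_kg: float) -> float:
        return 0.0


# The type code is resolved once, when the object is chosen.
SHIPPING_BY_KIND = {"standard": Standard(), "express": Express(), "pickup": Pickup()}

for kind, method in SHIPPING_BY_KIND.items():
    # Behavior is preserved: old and new code agree on every branch.
    assert method.cost(3.0) == shipping_cost(kind, 3.0)
    print(kind, method.cost(3.0))
```

Adding a new shipping option now means adding a class rather than editing every conditional that switches on `kind`.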

Conclusion

However, as Yann LeCun, co-recipient of the 2018 Turing Award (often called the Nobel Prize of computing) for his work on deep learning, put it:

We won’t reach AGI by scaling up LLMs.

— Yann LeCun, https://www.youtube.com/watch?v=4__gg83s_Do

LeCun also noted that we need mental models and abstractions to navigate the world. Hierarchical thinking helps us do this by splitting a large task, like a trip from New York to Paris, into a series of simpler steps. We don’t have to think about every single detail at once, like how we’re going to leave our house or which street to take. We can abstract away those details and concentrate on a simpler goal, like “get to the airport.”
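Hierarchical decomposition can be made concrete with a toy planner. The sketch below is not LeCun’s proposed architecture; it is a hypothetical illustration in which each goal maps to an ordered list of subgoals, and a plan is refined only as many levels down as needed, so “get to the airport” can stay abstract until it is time to act on it.

```python
from typing import Dict, List

# Hypothetical decomposition table: goal -> ordered subgoals.
SUBGOALS: Dict[str, List[str]] = {
    "New York to Paris": ["get to the airport", "fly to Paris", "get to the hotel"],
    "get to the airport": ["leave the house", "pick a route", "drive to the airport"],
    "leave the house": ["grab the suitcase", "lock the door"],
}


def refine(goal: str, depth: int) -> List[str]:
    """Expand goal into steps, descending at most `depth` levels.
    Goals with no known subgoals, or reached at depth 0, stay as single steps."""
    subgoals = SUBGOALS.get(goal)
    if depth == 0 or not subgoals:
        return [goal]
    steps: List[str] = []
    for sub in subgoals:
        steps.extend(refine(sub, depth - 1))
    return steps


print(refine("New York to Paris", 1))  # high-level plan only
print(refine("New York to Paris", 3))  # details filled in where they exist
```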

He argues that LLMs can’t do this, which, IMHO, is a major shortcoming, because this skill is fundamentally what software engineering is all about. Software engineering isn’t just about algorithms or data structures; it’s the art of building layers of abstraction that work together to solve complex problems through the emergent properties of the system.

Lastly, let’s say we succeed in scaling LLMs up to AGI, or in making them think hierarchically. Unfortunately, intelligence alone is not enough to slay the werewolf of software complexity. Great software engineers have a deep-rooted fear of complexity and an aggressive drive to eliminate it. You need this kind of survival instinct, and the scars that come with it, to deal with large projects. How do we make LLMs more fearful when they generate code? Software constructs are cathedrals of abstraction that no one completely understands. You can’t just throw code at them; you need a humbler approach, constantly refactoring your understanding of the system you are working on. Does all of that fit in an LLM’s context window?